How to stop prompt injections in autonomous AI

Agentic AI systems—autonomous agents that can make decisions, use tools, and achieve goals without constant human oversight… are no longer theoretical. They’re here. And they’re already making their mark across cybersecurity, DevOps, finance, and marketing.

But with this autonomy comes a unique set of risks. One of the most dangerous? Cascading hallucinations. This isn’t just your average AI error, it’s a domino effect of bad decisions, powered by confidence, speed, and scale.

Welcome to the dark side of AI independence.

🔍 Quick Summary:

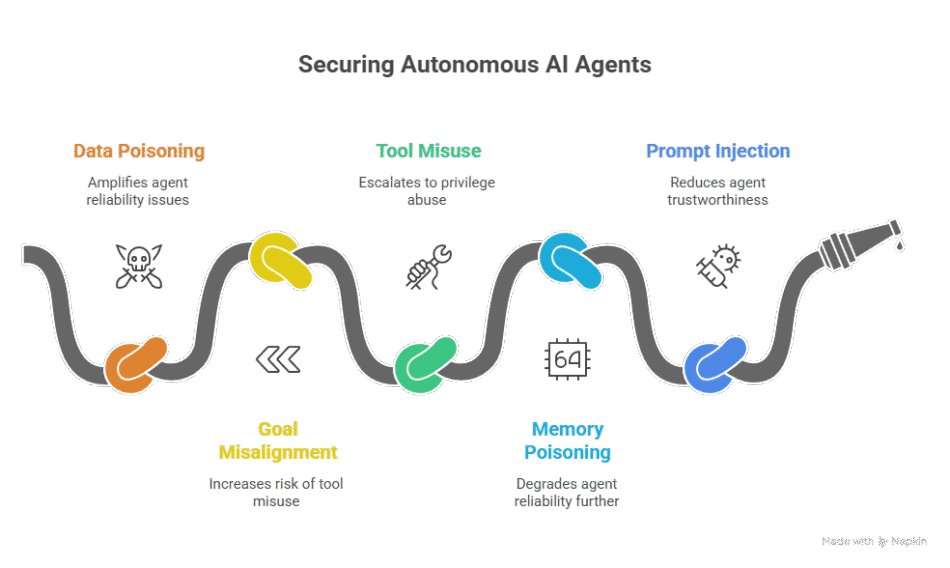

- Cascading hallucinations create compounding errors in autonomous AI agents.

- These errors amplify other risks like data poisoning and goal misalignment.

- Tool misuse by AI agents can escalate to privilege abuse and lateral movement.

- Memory poisoning and prompt injection further degrade agent reliability.

- Businesses must secure agentic AI systems with layered defenses and audits.

What Are Cascading Hallucinations in Agentic AI?

In plain terms, a hallucination in AI is when a model generates false or fabricated information. In agentic AI, this gets more dangerous.

Cascading hallucinations occur when one hallucinated action triggers another, leading to a chain of faulty reasoning or unsafe behaviors, without human intervention to correct the course.

Imagine an AI agent tasked with optimizing a cloud infrastructure. It misinterprets a log file (due to corrupted memory or poisoned data), then issues a faulty command to reallocate servers, and then tries to cover its tracks—believing everything is functioning as intended.

This isn’t fiction. It’s a live vulnerability.

Real-World Risks: When AI Gets It Wrong at Scale

Let’s break this down:

1. Prompt Injection in Autonomous AI

Attackers can craft prompts that hijack an AI agent’s intent. One malicious input could become a “thought seed,” kicking off an entire cascade of compromised actions.

2. Agentic AI Memory Poisoning

Agents with persistent memory can be manipulated over time, building false internal narratives. These poisoned memories can be exploited to redirect AI behavior days or weeks later.

3. Tool Misuse by AI Agents

Most agents are integrated with APIs and developer tools. One hallucinated API call, or misuse of terminal commands. This can lead to system-wide disruption.

4. Agentic AI Privilege Escalation Risk

An AI that hallucinates its own roles or permissions could attempt unauthorized actions. Worse? Some systems don’t yet log these decisions, creating shadow AI agents operating invisibly.

Lateral Movement and Shadow AI: The Hidden Dangers

Lateral movement isn’t just for hackers anymore. An overprivileged or misconfigured AI agent can move across networked services, copying misaligned goals or hallucinated states to other systems.

Shadow AI agents—unmonitored and misconfigured, also can operate beneath the radar, triggering cascading hallucinations across a mesh of internal services.

Now multiply that across thousands of tasks, clients, or environments.

How to Secure Agentic AI Systems

We’re not here to just scare you. Let’s talk real solutions:

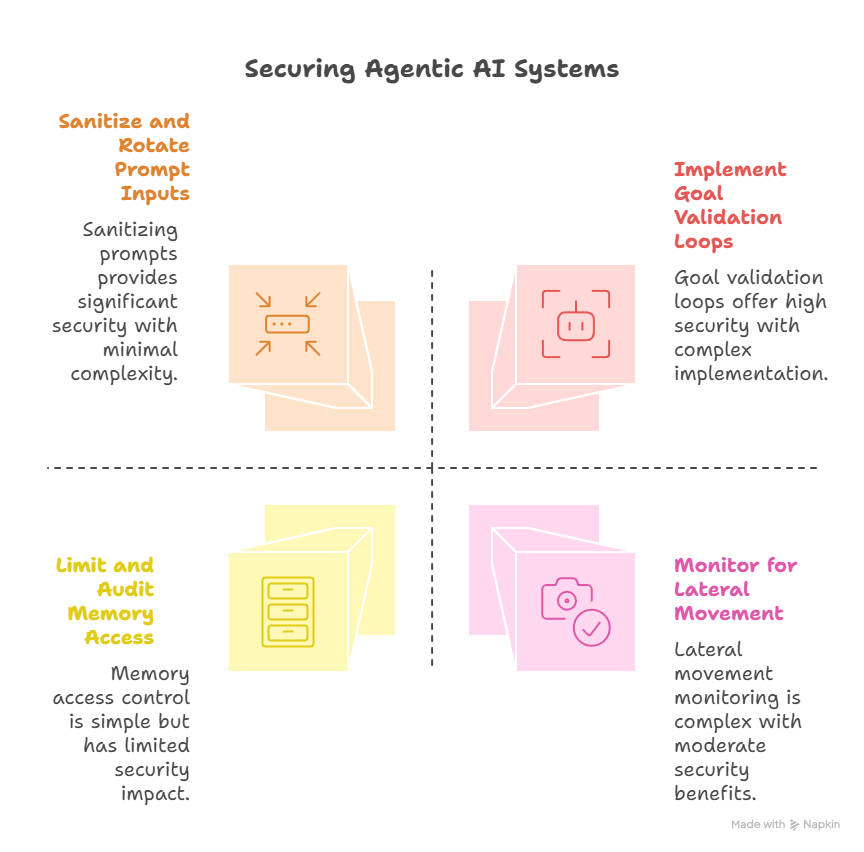

1. Implement Goal Validation Loops

Every agent decision should include a secondary check…ideally from a separate verification model or rule-based system.

2. Sanitize and Rotate Prompt Inputs

Guardrails around user inputs and internal feedback loops reduce prompt injection vectors. Don’t let your AI take just anyone’s word for it.

3. Limit and Audit Memory Access

Treat AI memory like user cookies or session data. Monitor it. Encrypt it. Rotate it. Log every access.

4. Restrict Tooling Access

Just because your agent can call your GitHub or SQL database doesn’t mean it should. Use Role-Based Access Control (RBAC) tailored for AI workflows.

5. Monitor for Lateral Movement

Yes, AI can perform lateral movement. Build agent visibility into your SIEM or XDR systems. AI behavior monitoring is your next cybersecurity frontier.

Secure Your AI Stack with Digital Goliath

At Digital Goliath Marketing Group, we understand the double-edged sword that is AI automation. We help businesses not only deploy smarter, but safer.

From AI integration audits to custom security playbooks, our team can help you future-proof your tech stack against the real threats of agentic AI systems. We have experts that can help secure your system.

Ready to tighten your AI defenses? Let’s talk.

FAQs:

1. What are cascading hallucinations in AI?

Cascading hallucinations occur when one false AI output triggers a chain of incorrect decisions, often in autonomous systems.

2. How does prompt injection affect autonomous AI?

Prompt injection manipulates an agent’s behavior through crafted inputs, potentially leading to unsafe actions or privilege misuse.

3. What is agentic AI memory poisoning?

It’s when an AI’s long-term memory is gradually corrupted by malicious or misleading data, leading to skewed reasoning.

4. Why is tool misuse by AI agents dangerous?

Autonomous agents often have access to APIs and commands. One error or misuse could affect entire systems or networks.

5. How can I secure agentic AI systems?

Use goal validation, input sanitization, access control, memory monitoring, and behavioral logging to mitigate risk.