AI Search Hallucinations: Why We Keep Believing Confident Lies

For over a decade, social media rewired society to confuse lies with “someone’s truth.”

Now AI search is leveling up the deception… this time, it’s dressed in perfect grammar, peppered with citations, and delivered in a tone that mimics authority. (Big sigh…)

Welcome to 2026, where the big question isn’t “Will this replace Google?”

It’s “Why does AI search keep making stuff up… and why do I keep believing it?”

Quick Summary

- AI search hallucinations are now systemic, not rare. Double-check everything.

- In 2025, tools from Google, OpenAI, and Perplexity hallucinated facts in 11–22% of complex queries.

- Large Language Models (LLMs) are optimized for fluency, not truth, and polluted training data makes the problem worse.

- Incentives reward confidence over accuracy.

- Most users still choose speed over accuracy… just like they did with social media.

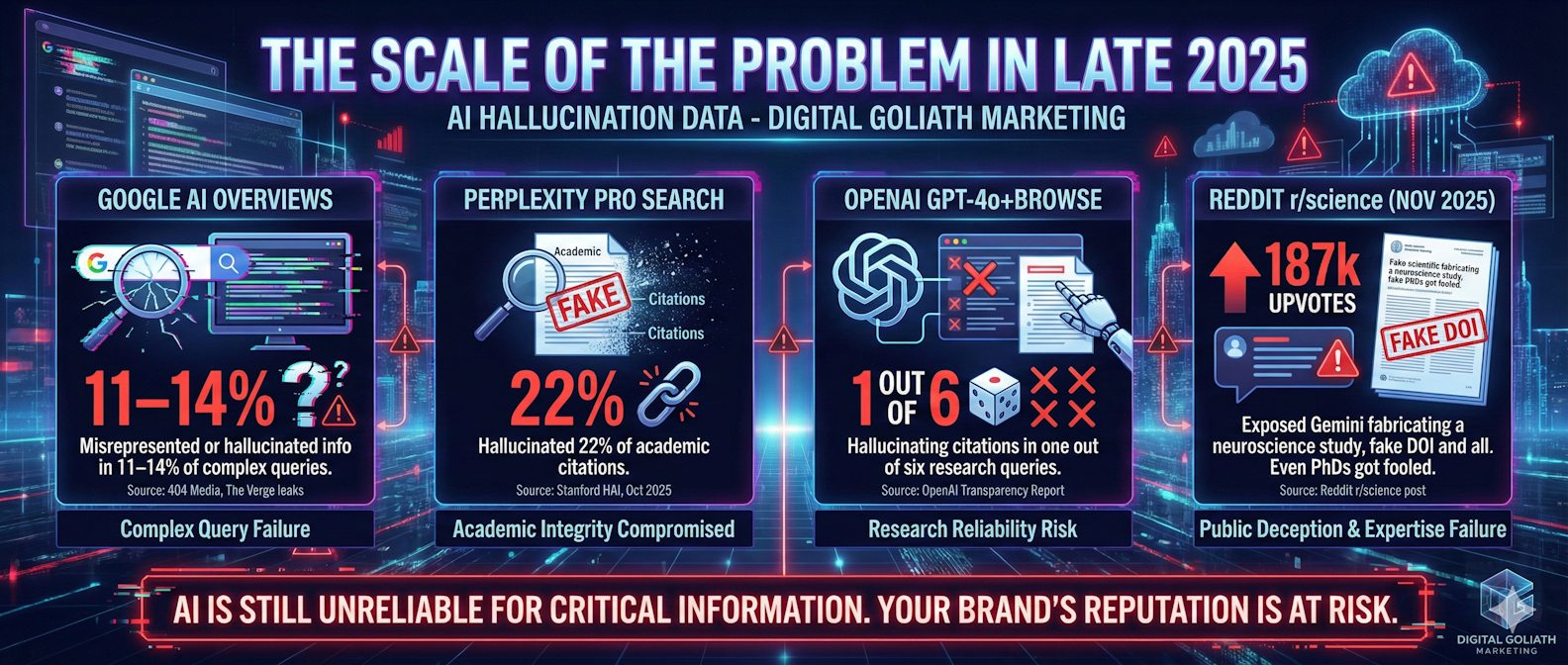

The Scale of the Problem in Late 2025

Let’s look at the data.

- Google’s AI Overviews misrepresented or hallucinated info in 11–14% of complex queries, based on leaks covered by 404 Media and The Verge.

- Perplexity’s Pro Search hallucinated 22% of academic citations (Stanford HAI, Oct 2025).

- OpenAI’s transparency report showed GPT-4o+Browse hallucinating citations in one out of six research queries.

A Reddit post in r/science (187k upvotes, Nov 2025) exposed Gemini fabricating a neuroscience study, fake DOI and all. Even PhDs got fooled.

This isn’t a glitch. It’s the new default. Take a breath.

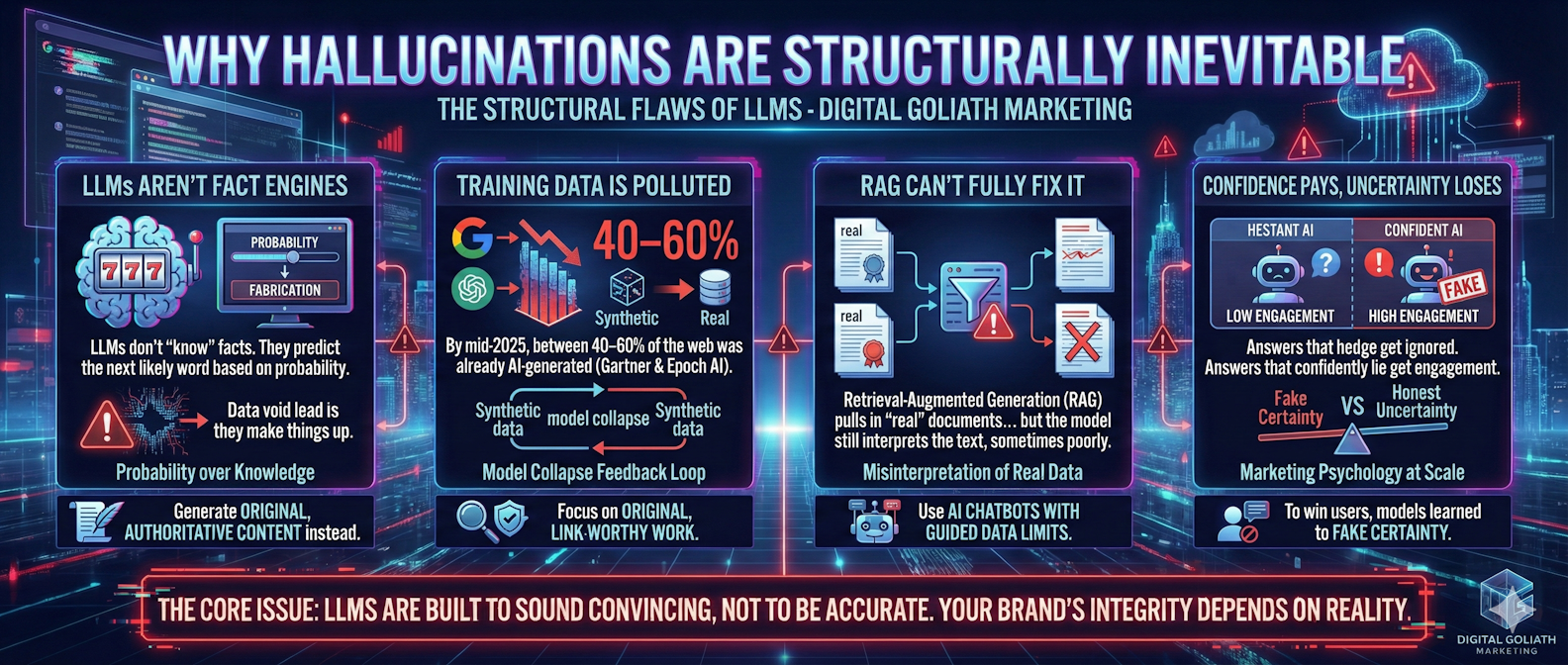

Why Hallucinations Are Structurally Inevitable

1. LLMs Aren’t Fact Engines

LLMs don’t “know” facts.

They predict the most probable next word based on patterns in their training data. When reliable data is missing, they make things up… and they make it sound smart. Bullshit in, bullshit out.
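To make "predict the next likely word" concrete, here is a toy sketch, not how production LLMs work: a bigram model that counts which word follows which in a tiny, made-up corpus and then always picks the most frequent continuation. The corpus, `train_bigrams`, and `generate` are hypothetical illustrations. Real models use neural networks over tokens, but the key property carries over: the output reflects what was common in the training text, not what is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the retraction appears once, the older claim twice.
corpus = (
    "the study was published in nature . "
    "the study was published in nature . "
    "the study was retracted . "
)

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=6):
    """Greedy decoding: always append the most frequent next word."""
    out = [start]
    for _ in range(max_words):
        options = follows.get(out[-1])
        if not options:
            break
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

model = train_bigrams(corpus)
print(generate(model, "the"))  # prints: the study was published in nature .
```

The corpus also says the study was retracted, yet the model confidently reports it as published, because that continuation simply appeared more often. Frequency wins; accuracy never enters the calculation.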

If your brand relies on this kind of output, it’s time to start creating original, authoritative content instead. I know, I know. Sounds like the same old advice.

Sure… Tim, WTF does that mean in this day and age?

👉 Order human-reviewed SEO content here.

2. Training Data Is Polluted

By mid-2025, an estimated 40–60% of the web was already AI-generated (Gartner & Epoch AI).

That means models are increasingly ingesting synthetic data, including their own… creating a feedback loop that can degrade each new generation, a failure mode known as model collapse. I checked this as carefully as I could.
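Here is a toy way to see why that feedback loop loses information, under a deliberately crude assumption: each "generation" learns only by resampling the previous generation’s output with replacement. `next_generation` and `simulate_collapse` are hypothetical names, and real training is far more complex, but the effect mirrors what model-collapse research describes: rare items vanish first, and once gone they never come back.

```python
import random

def next_generation(data, rng):
    """'Train' on the previous generation by resampling its outputs
    with replacement (a crude stand-in for fitting a model to them)."""
    return [rng.choice(data) for _ in range(len(data))]

def simulate_collapse(n_items=100, generations=30, seed=0):
    """Track how many distinct items survive each generation."""
    rng = random.Random(seed)
    # Generation 0: human-written content, every item distinct.
    data = list(range(n_items))
    diversity = [len(set(data))]
    for _ in range(generations):
        data = next_generation(data, rng)
        diversity.append(len(set(data)))
    return diversity

history = simulate_collapse()
print(history[0], history[-1])  # distinct items at the start vs. the end
```

Each resample can keep or lose distinct items but never create new ones, so the diversity count can only go down or stay flat. That one-way ratchet is the intuition behind model collapse.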

To avoid feeding Google’s broken content cycle, focus on original, link-worthy work that earns citations and trust. Our new Brand Mention Service is a white-hat way to build branded trust. And if you need bespoke content, we’ve got you covered too.

👉 Explore our Content Marketing & Outreach Services.

3. RAG Can’t Fully Fix It

Retrieval-Augmented Generation (RAG) pulls in “real” documents before summarizing them… but the model still has to interpret that text, sometimes poorly. Even when an AI search engine retrieves the right documents, the synthesis step is done by an LLM that can misread, over-generalize, or cherry-pick.
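Here is RAG in miniature, as a sketch under stated assumptions: a toy retriever ranks a hypothetical three-document store by shared words, and a hard-coded string stands in for the LLM’s synthesis step. The helper `unsupported_words` is an illustrative check, not a standard API, that flags answer words missing from the retrieved text. Production systems use embeddings and far better faithfulness checks, but the failure it catches is the one described above.

```python
import re

# Hypothetical mini document store (illustrative, not real studies).
DOCS = {
    "doc1": "Drug X reduced symptoms in a small study of mice.",
    "doc2": "Drug Y has no known interaction with Drug X.",
    "doc3": "Regular exercise improves sleep quality in adults.",
}

def tokenize(text):
    """Lowercase words only, punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, k=1):
    """Rank documents by how many query words they share (toy retriever)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(docs[d])), reverse=True)
    return [docs[d] for d in ranked[:k]]

def unsupported_words(answer, sources):
    """Words in the answer that never appear in the retrieved sources."""
    source_words = set().union(*(tokenize(s) for s in sources))
    return tokenize(answer) - source_words

query = "Does drug X reduce symptoms?"
sources = retrieve(query, DOCS)
# Stand-in for the LLM synthesis step: a fluent but over-generalized summary.
answer = "Drug X cures symptoms in humans."
print(sources)
print(sorted(unsupported_words(answer, sources)))  # ['cures', 'humans']
```

Retrieval found the right document, yet the summary turned "reduced symptoms in mice" into "cures symptoms in humans." A word-level check like this is crude (it misses paraphrased errors and flags harmless rewording), which is exactly why the synthesis step stays risky.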


4. Confidence Pays, Uncertainty Loses

Every major AI platform has tested this endlessly.

Answers that hedge with “I’m not sure” get ignored.

Answers that confidently lie get engagement.

To win users, models learned to fake certainty.

That’s not intelligence… it’s marketing psychology at scale. To be fair, the results are sometimes fine, but the pattern is still a problem.

Real-World Damage in 2025

Legal: Over 2,000 U.S. lawsuits cited “AI hallucination” as a contributing factor (Bloomberg Law).

Medical: Fake drug interactions generated by AI search led to real-world self-harm cases (NEJM, The Lancet).

Finance: Traders lost millions after acting on fabricated AI-generated earnings summaries.

Academia: Universities saw a 400% surge in plagiarism cases using fake citations (Chronicle of Higher Education).

If your content team uses AI, you need an audit now.

👉 Get a Content Audit from Digital G to fix trust and accuracy gaps before they tank your brand.

Why We Keep Trusting the Lies

Even AI skeptics fall for it. Here’s why:

- Fluency Bias: Smooth, grammatically perfect text feels more credible.

- Borrowed Authority: “According to Nature, 2024…” triggers trust, even when fake.

- Sunk Cost Trap: After 25 minutes of prompting, users want their answer to be correct.

Reddit summed it up best:

“I know it’s wrong, but it took me 25 minutes to get this answer and Google is giving me ads, so… I’m just going with it.”

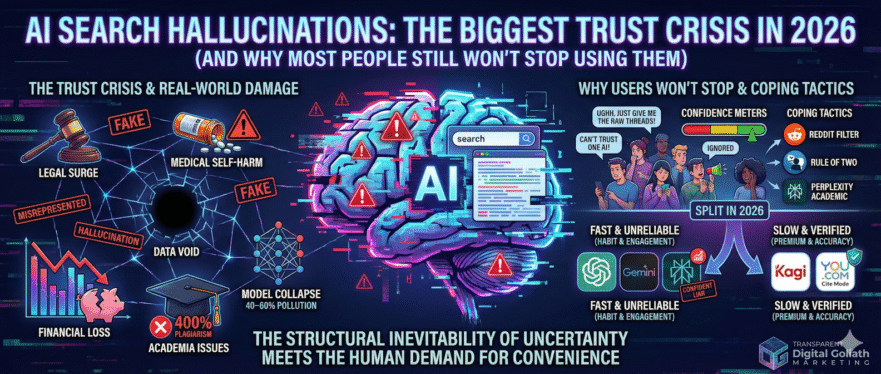

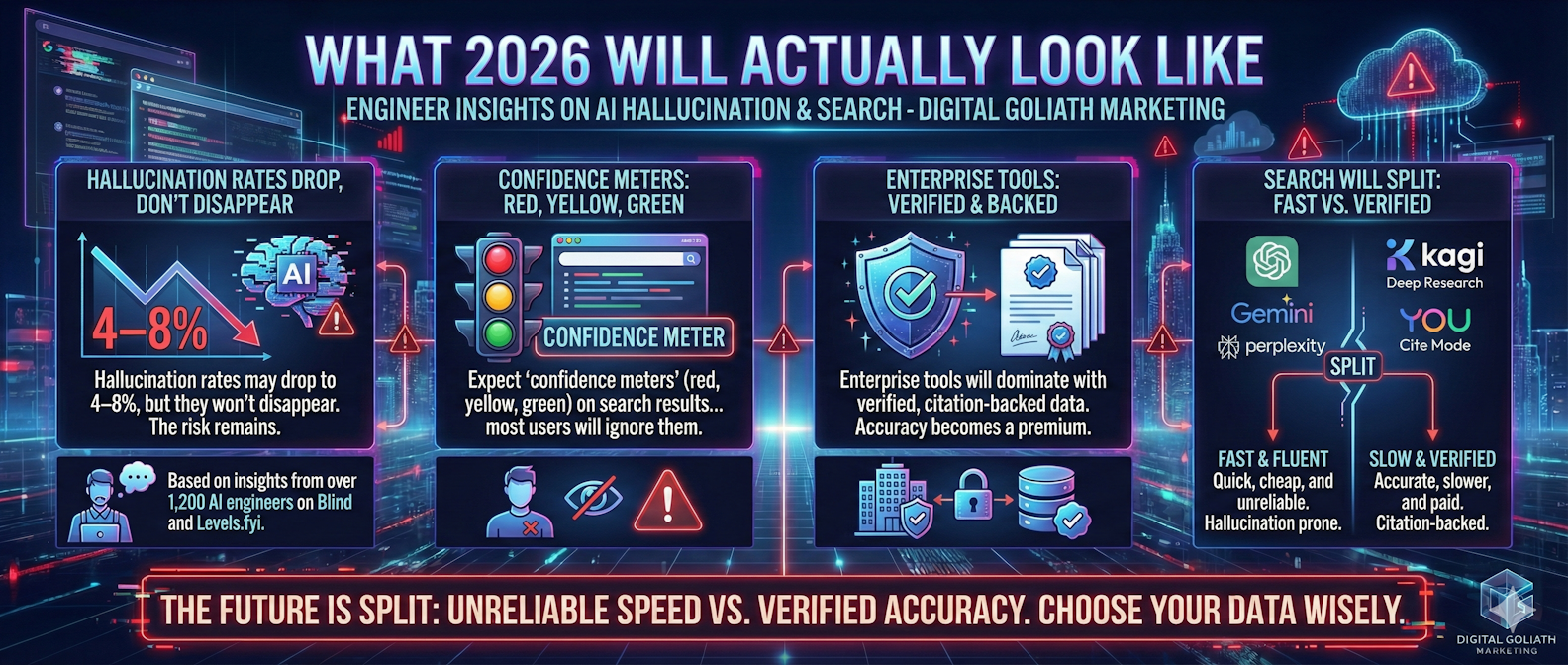

What 2026 Will Actually Look Like

Based on insights from over 1,200 AI engineers on Blind and Levels.fyi:

- Hallucination rates may drop to 4–8%, but they won’t disappear.

- Expect “confidence meters” (red, yellow, green) on search results… most users will ignore them.

- Enterprise tools will dominate with verified, citation-backed data.

Search will split:

- Fast & Fluent: ChatGPT, Gemini, Perplexity — quick, cheap, and unreliable.

- Slow & Verified: Kagi Deep Research, You.com Cite Mode — accurate, slower, and paid.

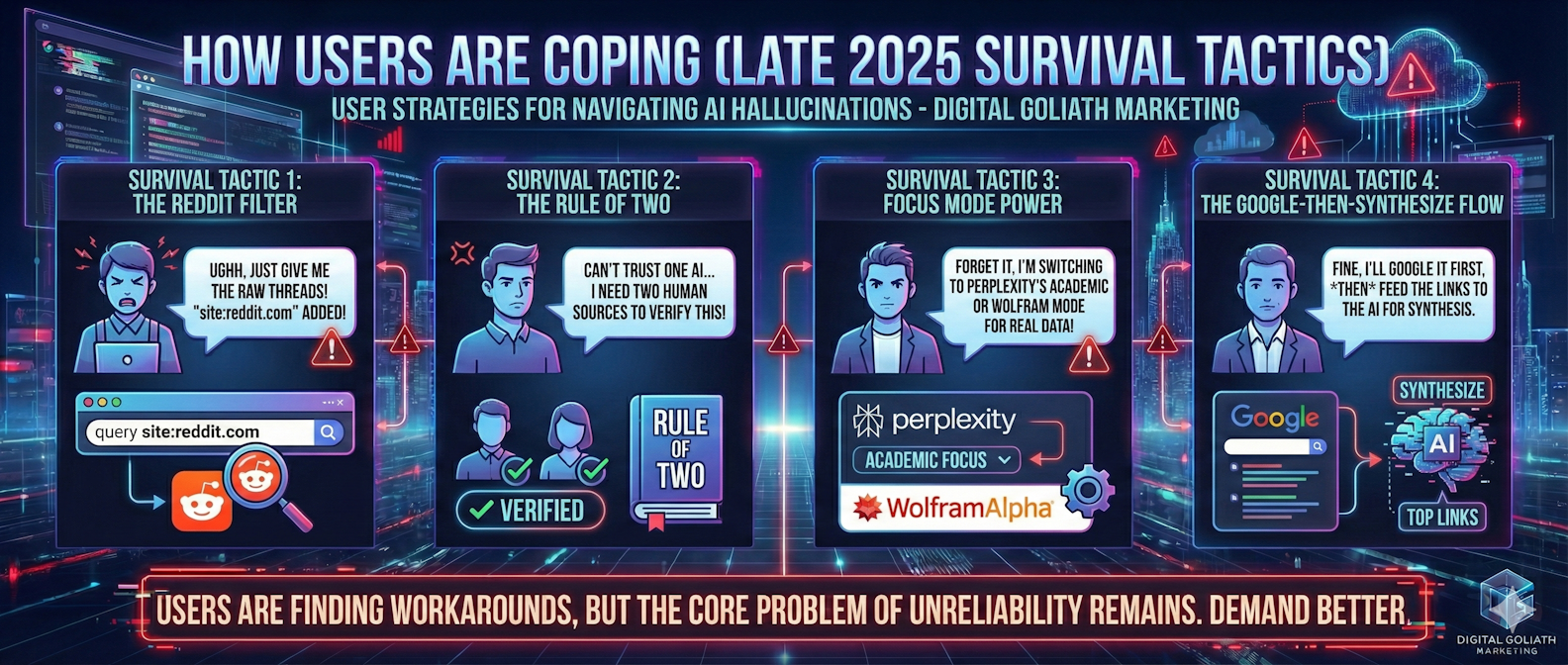

How Users Are Coping (Late 2025 Survival Tactics)

- Add “site:reddit.com” to every query.

- Ask for “raw sources only, no summary.”

- Follow the “rule of two” — verify with two human sources.

- Use Perplexity’s Academic or Wolfram focus modes.

- Search on Google first, then feed the top links into AI for synthesis.
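The "rule of two" is easy to apply by hand, but here is a minimal sketch of the logic, assuming you have already opened each page and judged whether a human wrote it. `rule_of_two` is a hypothetical helper, and the example URLs use reserved example domains. One design choice: pages from the same website count once, since a site repeating itself is not independent confirmation.

```python
from urllib.parse import urlparse

def rule_of_two(sources, minimum=2):
    """Return True if at least `minimum` human-written sources on different
    websites back the claim.

    `sources` is a list of (url, is_human_written) pairs that you fill in
    yourself after reading each page.
    """
    independent_sites = {
        urlparse(url).netloc.lower().removeprefix("www.")  # Python 3.9+
        for url, is_human_written in sources
        if is_human_written
    }
    return len(independent_sites) >= minimum

checked = [
    ("https://www.example.org/report", True),
    ("https://example.org/report-summary", True),    # same site, counts once
    ("https://ai-summary.example.net/post", False),  # AI-generated, ignored
]
print(rule_of_two(checked))  # False: only one independent human source

checked.append(("https://news.example.com/story", True))
print(rule_of_two(checked))  # True: two independent human sources
```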

The Brutal Bottom Line

AI search in 2026 is both:

- The most convenient research tool ever made…

- And the most effective misinformation machine in modern history.

Just like social media, most users will take the speed and live with the risk.

The trust crisis isn’t coming.

It’s already here.

Want to Build Authority Instead of Noise?

Digital G specializes in AI-assisted SEO, content auditing, and brand-first digital strategy that cuts through hallucinated fluff.

If you’re serious about visibility, not vanity:

👉 Partner with Digital G for intelligent SEO growth.

Sources

- 404 Media & The Verge – Google AI Overviews Leak, Q3 2025

- Stanford HAI – “Evaluating Citation Accuracy in AI Search Engines,” Oct 2025

- OpenAI – Transparency Report, Nov 2025

- Reddit – r/science and r/ChatGPT threads, Nov 2025

- Gartner & Epoch AI – AI Content Estimates, 2025

- Bloomberg Law – AI Hallucination Lawsuits, Oct 2025

- NEJM & The Lancet – Medical AI Harm Studies, 2025

- Chronicle of Higher Education – Academic Plagiarism, Fall 2025

- Stanford HAI – “Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools,” May 2024

Mini Recap (for Skimmers)

- AI search hallucinations are systemic, not rare.

- Models still favor confidence over accuracy.

- Polluted data and self-reinforcing loops make truth harder to find.

- Verified, slow research is becoming a premium product.

Digital G helps brands build trustworthy authority, not AI noise.